Would you live as an undocumented person for three months? Lucia Gallardo, Founder & CEO of Emerge, did just that. Her aim: to understand the problems undocumented people face. For context, Emerge is a tech lab that works at the convergence of sustainable development and social impact. Talk about deep diving into a project!

Very often, entrepreneurs come up with solutions that do not work for their target audience. The best way to mitigate this, says Dexter Zhuang, Product Lead, Merchant Experience, at Xendit, is “by asking the right questions. You can learn the real, tangible experience of your customers, and understand what makes their experience painful or desirable”.

In Lucia’s case, she worked with undocumented workers and displaced populations. Their biggest struggles are cashing their paychecks and accessing their savings. In an episode of the Slaves to the Algo podcast, she expands on these issues and talks about how tech is being used for social good.

The scope of possibilities in this sector is vast and growing every day. Here are a few ways the industry is pulling together to make lives better.

Breaking biases

Humans have biases. Humans train AI. AI learns from us and our interactions. As much as it imbibes values, it imbibes biases as well.

Dr. Joy Buolamwini, a graduate researcher at the Massachusetts Institute of Technology (MIT) and the founder of the Algorithmic Justice League, says, “One of the major issues with algorithmic bias is you may not know it’s happening.” She realized this while creating the Aspire Mirror. The device allows users to see their reflection projected with something that inspires them, or something they empathize with. However, the program could not detect her face even after numerous debugging attempts.

Joy decided to take things into her own hands. Literally. She drew a face on her palm. The program detected it instantly. Next, she did something she felt conflicted about. She wore a plain, white-colored mask lying in her office. Again, the recognition was instantaneous. This prompted her to dig further into the benchmarks used for facial recognition. Here’s what she gathered:

- One of the leading gold standards, named “Labeled Faces in the Wild”, was composed of 70% male and 80% white faces.

- Data sets were heavily skewed because celebrities’ looks were used as the standard. The faces of the rest of the world do not necessarily align with this ‘standard’.

Joy started to see something she calls “power shadows”, which appear when the inequalities or imbalances that we have in the world become embedded in our data. These are just the findings of one person. Imagine this on a global scale.

This is exactly what Affectiva’s Emotion AI tries to counter. In a 2021 update, they trained the algorithm with 50,000 unique face videos from a dataset of 12 million+ videos of people from 90+ countries.

- 50% of the data was composed of female face videos

- Over half the subjects were non-Caucasian

- Significant progress was made in the detection and analysis of facial expressions in older populations and those of African and South Asian origin

It’s the kind of inclusivity and diversity we like to see!
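To make the idea of a skewed benchmark concrete, here is a minimal sketch of how one might audit the composition of a face data set before trusting it as a standard. Everything in it is an assumption for illustration: the toy records, the attribute names, the `composition` and `underrepresented` helpers, and the 25% floor are hypothetical and do not come from any of the data sets mentioned above.

```python
from collections import Counter


def composition(records, attribute):
    """Return each value's share of the data set for one attribute."""
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    return {value: count / total for value, count in counts.items()}


def underrepresented(shares, floor=0.25):
    """List the groups whose share falls below the chosen floor."""
    return sorted(value for value, share in shares.items() if share < floor)


# Hypothetical metadata for ten face images (illustrative only).
records = (
    [{"gender": "male", "skin": "lighter"}] * 6
    + [{"gender": "male", "skin": "darker"}] * 1
    + [{"gender": "female", "skin": "lighter"}] * 2
    + [{"gender": "female", "skin": "darker"}] * 1
)

gender_shares = composition(records, "gender")
skin_shares = composition(records, "skin")

print(gender_shares)
print(skin_shares)
print(underrepresented(skin_shares))  # -> ['darker']
```

A check like this will not remove bias on its own, but it makes a lopsided data set visible before a model is trained or benchmarked on it.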

Healing with Big Data

While data can help us understand why certain medical emergencies occur, the insights it holds can mean the difference between life and death for millions of people.

Cardiovascular disease is now the world’s leading cause of death, costing 18 million lives a year. To address this burgeoning issue, we need large-scale data and actionable insights that can help experts come up with workable solutions. However, the required data is spread across nations, and is often scrambled and unusable.

To tackle this, Novartis Foundation, Microsoft, and Accenture have collaborated to establish AI4BetterHearts, the first global collaborative for cardiovascular health. Their sole aim is to analyze the data pertaining to cardiovascular disease, and derive useful insights from such data, while upholding privacy standards.

Having a centralized repository of data and analyzing it can tell us which cause ranks first, which factors can be tackled easily, and where emergencies stem from a lack of access to medical facilities. For example, a 2020 report published by WHO suggests that 53% of the 155 nations surveyed reported disrupted hypertension services. This was the cause of 31% of cardiovascular emergencies. A viable system that guarantees access could solve the problem to a great extent.

ARMMAN, an Indian non-profit, has been using AI to bring down maternal mortality. Partnering with Google Research India, they launched mMitra, an AI-integrated program that provides counseling and guidance to pregnant women and new mothers.

This simple integration had a positive impact on program improvement, the creation of targeted programs, and the prediction of high-risk conditions and beneficiary behavior. The data showed:

- 25% increase in pregnant women who took IFA tablets for 90 or more days

- 47.7% increase in proportion of women who knew at least 3 family planning methods

- 17.4% increase in proportion of infants who tripled their birth weight at the end of 1 year

Proof enough that AI can be put to life-saving work? We think so.

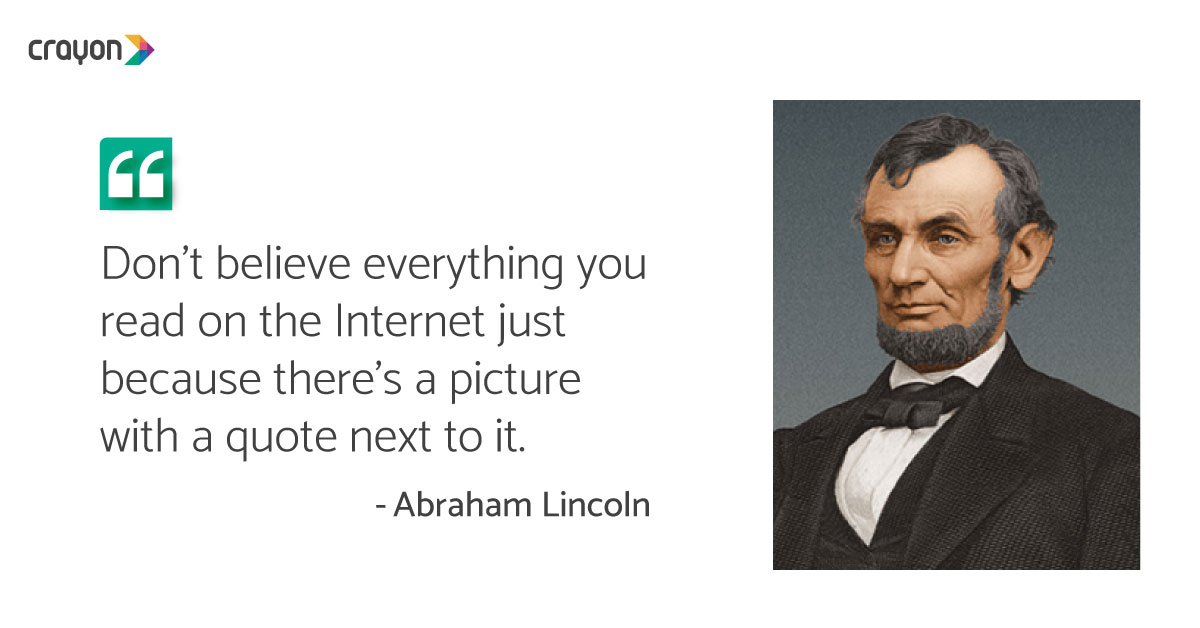

True or false: figuring out fake news

In recent years, the spread of fake data and information has led to devastating consequences. In the early days of the pandemic, for instance, hundreds of people died globally due to misinformation surrounding Covid-19.

Fake news is now a weapon. It is often wielded by forces with vested interests, and politics is one of its major arenas. Back in 2017, Vice.com explored how the social media behavior of the masses was used to direct the outcome of the 2016 Presidential Elections in the United States of America and the Brexit campaign in the United Kingdom. And even in 2023, it’s not going anywhere.

Logically.ai aims to avert such misuse of data and intercept false claims before they spread. Working with automated AI tools and filters, as well as expert analysts, they have defused several fake news claims successfully. Some examples:

- A viral tweet claimed that the Turkey/Syria earthquake on February 6, 2023, was not natural, but caused by the High-frequency Active Auroral Research Program (HAARP).

- Conspiracy theorist Jerome Corsi claimed in a video that a group of U.S. military generals planned a coup against President Obama.

- The 2023 Indian budget announced financial support to undertrial prisoners who cannot afford bail. This was shared misleadingly as being earmarked for Muslim prisoners.

With such validation tech evolving rapidly, we’ll have to wait and watch if it can keep pace with the flow of misinformation.

Even so, we’re yet to hear a unanimous ‘Aye, aye’ for AI. That’s because, like any new tech, it still faces several challenges. Just look at the industries we’ve discussed here today.

1. There’s data, and then there’s daaaaaaaaattaaaaaa

When it comes to the healthcare industry, the sheer load of data to be processed, cleaned up, and analyzed is dumbfounding. The logical response would be, “Why don’t you take a smaller set?” The problem is that a smaller sample may not reveal the insights that the entire data set would. Solutions built on such a sample may not apply to the whole population the data set represents.
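A little arithmetic shows why a smaller set can hide what the full data holds. The sketch below is purely illustrative: the 1% prevalence of a rare condition, the sample sizes, and the function names are assumptions, and each person in a sample is treated as an independent draw. It computes the chance that a random sample contains nobody with the condition, then checks the formula against a small seeded simulation.

```python
import random


def miss_probability(prevalence, sample_size):
    """Chance that a random sample contains zero people with the condition."""
    return (1 - prevalence) ** sample_size


def simulated_miss_rate(prevalence, sample_size, trials=2000, seed=0):
    """Estimate the same chance by drawing many random samples."""
    rng = random.Random(seed)
    misses = 0
    for _ in range(trials):
        # Each person independently has the condition with the given prevalence.
        found = any(rng.random() < prevalence for _ in range(sample_size))
        if not found:
            misses += 1
    return misses / trials


PREVALENCE = 0.01  # hypothetical: 1 in 100 patients has the rare condition

for n in (50, 500, 5000):
    print(n, round(miss_probability(PREVALENCE, n), 4))

print("simulated, n=50:", simulated_miss_rate(PREVALENCE, 50))
```

Under these assumptions, a sample of 50 misses the condition entirely about 60% of the time, while a sample of 500 misses it less than 1% of the time. The rarer the pattern, the more data it takes just to see it.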

2. Diversity is more than just a feature

Many AI enterprises are so focused on making tech bridge the gap that they forget to bridge it within the enterprise itself. A larger and more diverse sample set is just one small part of advancing emotional AI. A study reports that even at industry giants such as Facebook and Google, women make up just 15% and 10% of AI researchers, respectively. Less than 5% of the workforce at Facebook, Google, and Microsoft is Black. With such a gap to be bridged at the grassroots level, it is not possible for the resultant AI to reflect the voice and vision of all.

3. AI vs AI

AI validating data? Great! But what about the bots being used to spread the wrong information? It’s not a game in which AI shoots down wrong information whenever it pops up. Rather, it’s a game in which it has to shoot down multiple sources at once. What’s worse is that it’s sometimes a battle between two AIs: one designed to spread wrong information and the other trying to curb it. The world hasn’t always been fair to living beings. So why should it be any different for AIs?

Working for social good has never been easy, and it’s the same with social tech entrepreneurship. Juggling privacy policies, international affairs, technological viability, a steady revenue, and a strong urge to do good is no mean feat. We hope that the goodness such enthusiasts sow drives them to keep going despite the bumpy route they have chosen.