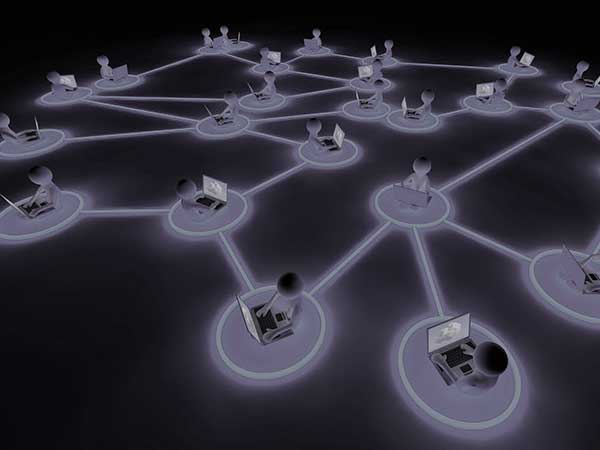

Almost every department and business function in the enterprise now has a big data application. The need to centralize and harness these assets has led more organizations to move big data responsibilities and assets to the corporate data center. This is a departure from many initial big data deployments, which were characterized by distributed pockets of big data in departments throughout the business.

The current movement to centralize big data in the data center is predicated on the hope that IT can manage these assets so that business end users receive the maximum benefit. For asset optimization to work in a centralized scheme, however, job scheduling becomes a central concern and a task that IT must do well. Scheduling big data jobs is a multifaceted responsibility with technical, operational, and political aspects.

From the standpoint of a high-performance computing (HPC) cluster, the goal of big data job scheduling is to process and complete as many jobs as possible. On the surface, this goal sounds similar to its transaction processing counterpart, but there are definite differences.

In big data and HPC, cluster processing is done in parallel, and the technical goals are twofold: (1) to keep one “large” job running in the background while many shorter jobs run (and complete) during the timeframe that the large job is running; and (2) to keep HPC CPU utilization above 90% at all times, so that the cluster is seldom idle.
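To make these two goals concrete, here is a minimal sketch in Python of a toy scheduler, not a description of any real HPC scheduler. It assumes a cluster with a fixed number of cores, one large job that holds some of them for its whole run, and a queue of short jobs that are greedily packed into whatever cores are left. The names `Job` and `simulate`, and all of the numbers in the example, are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    cores: int      # cores the job holds while it runs
    duration: int   # number of time steps the job runs


def simulate(total_cores, large_job, short_jobs):
    """Toy model: run one large job in the background and pack short jobs
    into the remaining cores (greedy first-fit, an assumption of this sketch).

    Returns (short jobs completed during the large job, CPU utilization).
    """
    queue = list(short_jobs)
    running = []            # entries are [remaining_steps, cores]
    busy_core_steps = 0
    completed = 0

    for _ in range(large_job.duration):
        free = total_cores - large_job.cores - sum(c for _, c in running)

        # Start every queued short job that fits in the free cores.
        still_waiting = []
        for job in queue:
            if job.cores <= free:
                running.append([job.duration, job.cores])
                free -= job.cores
            else:
                still_waiting.append(job)
        queue = still_waiting

        # Record how many cores were busy during this time step.
        busy_core_steps += total_cores - free

        # Advance the clock and retire short jobs that have finished.
        for entry in running:
            entry[0] -= 1
        completed += sum(1 for remaining, _ in running if remaining == 0)
        running = [entry for entry in running if entry[0] > 0]

    utilization = busy_core_steps / (total_cores * large_job.duration)
    return completed, utilization


# Hypothetical workload: a 10-core cluster, one 6-core large job that runs
# for 10 steps, and four 2-core short jobs that each run for 4 steps.
large = Job("large", cores=6, duration=10)
shorts = [Job(f"short-{i}", cores=2, duration=4) for i in range(4)]
done, util = simulate(10, large, shorts)
print(f"short jobs completed: {done}, utilization: {util:.0%}")
```

With this workload, all four short jobs finish while the large job is still running, and the cluster stays busy for 92 of its 100 available core-steps. The last two steps, when the short-job queue is empty, are where utilization drops, which is the kind of idle time the 90% target is meant to limit.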