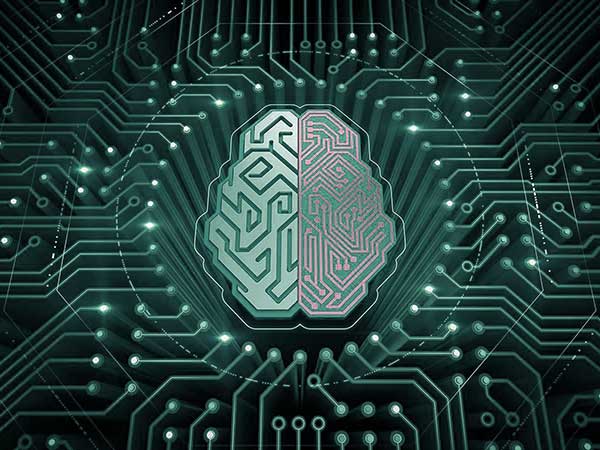

Robots are becoming more human-like faster than ever, thanks to advances in Artificial Intelligence, especially deep learning. Take, for example, this recent blog post from Google, in which a team of researchers describes a mechanism for training a robot to grasp objects in a “non-robotic” manner. Until recently, programming robots to perform human-oriented tasks, such as manipulating tools or navigating unknown terrain, was a major challenge.

Approaches to the tasks in the DARPA Robotics Challenge, for instance, relied on collecting precise sensor observations and computing complex plans of action, and lacked the fluidity and flexibility that humans exhibit. Rather than relying on hand-crafted, highly engineered algorithms for these complex tasks, researchers have begun using deep learning to create a feedback mechanism that lets the robot learn the best way to perform a task through trial and error. Deep learning is a subset of machine learning that specializes in recognizing patterns in high-dimensional, non-linear inputs using a neural network: a stack of layers, each of which extracts features from the output of the layer before it. For more information on Deep Learning, take a look at this previous blog post.
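To make "a stack of feature-extracting layers" more concrete, here is a minimal sketch of a forward pass through a small fully connected network in plain Python. The layer sizes, the ReLU activation, and the random weights are illustrative assumptions: the network is untrained, and real face models typically use convolutional layers built with a library such as TensorFlow or PyTorch.

```python
import random
from typing import List, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weights, biases)


def make_layer(n_in: int, n_out: int, rng: random.Random) -> Layer:
    """Randomly initialised fully connected layer (untrained, illustrative only)."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def dense(inputs: List[float], layer: Layer) -> List[float]:
    """Each output unit is a weighted sum of every input plus a bias."""
    weights, biases = layer
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


def relu(values: List[float]) -> List[float]:
    """Non-linearity: without it, stacked layers would collapse into one linear map."""
    return [max(0.0, v) for v in values]


def forward(x: List[float], layers: List[Layer]) -> List[float]:
    """Feed the input through each layer in turn; hidden layers apply ReLU."""
    h = x
    for i, layer in enumerate(layers):
        h = dense(h, layer)
        if i < len(layers) - 1:
            h = relu(h)
    return h


rng = random.Random(0)
# Hypothetical sizes: a 4x4 grayscale patch (16 values) -> 8 features -> 4 features -> 2 scores
network = [make_layer(16, 8, rng), make_layer(8, 4, rng), make_layer(4, 2, rng)]
patch = [rng.random() for _ in range(16)]
scores = forward(patch, network)
print(scores)
```

Each layer only sees the previous layer's output, which is what lets later layers build more abstract features (edges, then facial parts, then whole faces) once the weights are learned.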

To demonstrate how we can use Deep Learning with a robot to emulate human behavior, let’s look at an example task: recognizing faces. Because we want the robot to recognize faces in a real-world environment, it first needs to find a face around it using a camera, then follow the face if it moves, and finally predict the person’s name. This task, while simple for humans, poses two major challenges for a robot: coordinating all of its hardware and software components so that it reacts fluidly, and ensuring that what it thinks is a face actually is a face and that it names the person correctly.

To tackle the first challenge, we can simplify the problem by breaking the task up into distinct “nodes” or processes, as shown in Figure 1.

Figure 1. ROS node diagram of face detection, tracking, and recognition. Orange shading marks a Deep Learning process.

To program the robot, we use the Robot Operating System (ROS), a software framework designed to simplify communication between a robot’s components. Each “node” becomes its own piece of code, and together the nodes carry out a simple algorithm for the task:

- First, a camera node receives images from the camera and sends the RGB data to the face detector node.

- The face detector node runs a Deep Learning model across the pixels of each image and identifies the location of the face in the image.

- A cropped image of the face is sent to the face identifier node, which uses another Deep Learning model to identify the person’s name.

- The coordinates of the face are sent to the face tracker node, which computes velocity commands for the camera’s motor.

- The camera’s motor executes these velocity commands so that the face stays centered in the camera’s view.
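The five steps above can be sketched without a robot as a minimal, single-process Python simulation. This is an assumption-laden illustration, not ROS code: the `Bus` class is a hypothetical stand-in for ROS topics (in ROS 1 Python, nodes are separate processes that communicate through `rospy.Publisher` and `rospy.Subscriber`, and callbacks run asynchronously). The topic names, `detect_face`, `identify`, `pan_velocity`, the proportional-control rule, and the gain value are all hypothetical; the two Deep Learning models are replaced by stubs.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Any, Callable, DefaultDict, List


class Bus:
    """In-process stand-in for ROS topics: publish() calls every subscriber."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, msg: Any) -> None:
        for callback in self._subscribers[topic]:
            callback(msg)


@dataclass
class Image:
    width: int
    height: int
    pixels: List[List[int]]  # rows of pixel values (RGB collapsed to one value for brevity)


@dataclass
class FaceBox:
    x: int  # left edge, pixels
    y: int  # top edge, pixels
    w: int
    h: int


def detect_face(img: Image) -> FaceBox:
    # HYPOTHETICAL stub for the face-detection model; always returns the same box.
    return FaceBox(x=40, y=12, w=10, h=10)


def identify(crop: List[List[int]]) -> str:
    # HYPOTHETICAL stub for the face-identification model.
    return "unknown"


def pan_velocity(box: FaceBox, image_width: int, gain: float = 0.005) -> float:
    # Assumed proportional controller: pan speed grows with the horizontal offset
    # of the face centre from the image centre (positive = pan right).
    error = (box.x + box.w / 2) - image_width / 2
    return gain * error


def wire_nodes(bus: Bus, log: List[tuple]) -> None:
    def detector(img: Image) -> None:
        box = detect_face(img)
        crop = [row[box.x:box.x + box.w] for row in img.pixels[box.y:box.y + box.h]]
        bus.publish("/face/crop", crop)              # cropped face -> identifier
        bus.publish("/face/box", (box, img.width))   # coordinates -> tracker

    def identifier(crop: List[List[int]]) -> None:
        log.append(("name", identify(crop)))

    def tracker(msg: tuple) -> None:
        box, width = msg
        bus.publish("/camera/pan_cmd", pan_velocity(box, width))

    def motor(velocity: float) -> None:
        log.append(("pan_velocity", velocity))  # a real node would drive the motor here

    bus.subscribe("/camera/image", detector)
    bus.subscribe("/face/crop", identifier)
    bus.subscribe("/face/box", tracker)
    bus.subscribe("/camera/pan_cmd", motor)


bus, log = Bus(), []
wire_nodes(bus, log)
frame = Image(width=64, height=48, pixels=[[0] * 64 for _ in range(48)])
bus.publish("/camera/image", frame)  # the camera node publishing one frame
print(log)
```

Keeping each node behind a topic means either model can be swapped out without touching the tracker or motor code, which is the main benefit of the node decomposition.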

Now that the framework for the task is set up, we need to train two Deep Learning models: one to locate a face in an image and one to identify the person’s name. Training these models is similar to how we would train a person: we show the model an image of a face, the model makes a prediction, we tell it whether it is correct, and the model adjusts. The training images for the first model are labeled with the coordinates of each face, and the training images for the second model are labeled with each person’s name. Once trained, the models are added to the algorithm programmed into the robot, and we get a result that looks like this.
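The predict, check, adjust loop described above can be illustrated with a multiclass perceptron, which changes its weights only when its prediction is wrong. This is a deliberately simplified stand-in for the name-identification model, not how deep networks are trained: those compute a loss on every example and update all layers with backpropagation and gradient descent. The two-number "face features", the names, and `max_epochs` are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Example = Tuple[Sequence[float], str]  # (feature vector, person's name)
Weights = Dict[str, List[float]]


def _score(w: List[float], x: List[float]) -> float:
    return sum(wi * xi for wi, xi in zip(w, x))


def _predict(weights: Weights, x: List[float]) -> str:
    # The name whose weight vector scores highest wins (ties go to the first name).
    return max(weights, key=lambda name: _score(weights[name], x))


def predict(weights: Weights, features: Sequence[float]) -> str:
    return _predict(weights, list(features) + [1.0])  # append constant bias input


def train(data: List[Example], max_epochs: int = 100) -> Weights:
    names = sorted({name for _, name in data})
    dim = len(data[0][0]) + 1  # +1 for the bias input
    weights: Weights = {name: [0.0] * dim for name in names}
    for _ in range(max_epochs):
        mistakes = 0
        for features, name in data:
            x = list(features) + [1.0]
            guess = _predict(weights, x)   # the model makes a prediction
            if guess != name:              # we tell it the prediction is wrong...
                mistakes += 1              # ...and it adjusts toward the right name
                weights[name] = [w + xi for w, xi in zip(weights[name], x)]
                weights[guess] = [w - xi for w, xi in zip(weights[guess], x)]
        if mistakes == 0:  # every training example is now classified correctly
            break
    return weights


# Toy 2-D "face features" with hypothetical names; real inputs would be face images.
training_data = [
    ((1.0, 0.0), "alice"), ((0.9, 0.1), "alice"),
    ((0.0, 1.0), "bob"), ((0.1, 0.9), "bob"),
    ((-1.0, -1.0), "carol"), ((-0.9, -1.1), "carol"),
]
model = train(training_data)
print(predict(model, (0.8, 0.2)))  # → alice
```

The face-locating model would follow the same loop, but with coordinate labels and a numeric error (how far the predicted box is from the labeled one) instead of a right/wrong check.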

What does this mean for your business?

Robots still have a long way to go before they achieve the kind of human-like behavior we often see in movies, but with recent advances in Deep Learning, businesses should no longer be asking whether robots are relevant to their industry, but when they will become relevant. Businesses have already begun investing heavily in robots to automate tasks historically reserved for humans, such as ride-sharing services using autonomous cars and warehouse inventory management using drones. As Deep Learning research continues to accelerate, we will begin to see businesses use emotion and speech analysis to let robots communicate and interact directly with customers.

For businesses contemplating the applications of robots, here are some questions to consider:

- What tasks could robots perform that human employees can’t or wouldn’t want to do?

- How could your customer interactions be automated using a robot?

- What important information could a robot collect from these interactions?

- What are the cost savings of automating the management and transportation of physical assets?

This post has been co-authored by Paul Gigioli.